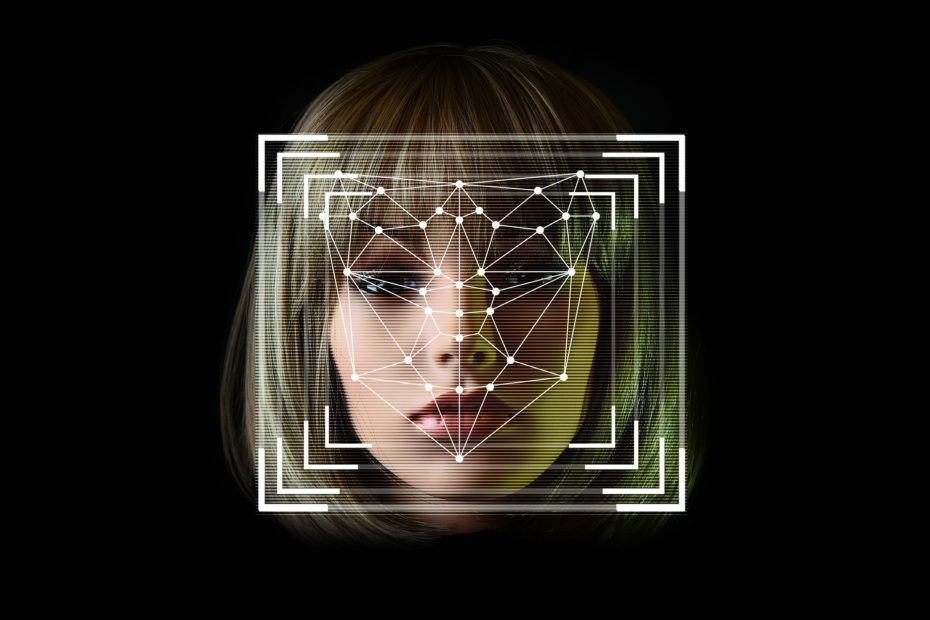

Clearview AI is possibly one of the biggest threats to privacy you’ve never heard of: a facial recognition tool powered by more than 3 billion images from every corner of the internet. The tool allows users to upload a picture of any individual, sending back search results of every publicly available image of that person, as well as links to the websites where those images were uploaded.

This capability allows Clearview AI to provide users with a comprehensive profile of anyone who has a publicly accessible image of themselves online. Clearview AI scrapes these images from the internet without the consent of the individuals in question, and in many cases in direct violation of the platforms' terms of service. The company keeps every image on file, from images that were uploaded without your consent to images that you have since deleted.

So, who uses this software? Unsurprisingly, law enforcement agencies are some of Clearview’s biggest clients. Immigration and Customs Enforcement, the U.S. Attorney’s Office for the Southern District of New York, the FBI, Customs and Border Protection and hundreds of police departments across the US all have contracts with Clearview. UK police have also reportedly tried out Clearview’s technology.

The company has actively marketed its wares to police departments across the US, offering free trials that have seen significant uptake. The Miami Police Department, San Mateo County Sheriff’s Office, Philadelphia Police Department and the Indiana State Police alone have collectively run over 12,000 searches. Police departments do not need a warrant to search Clearview’s database, despite the fact that Clearview’s technology gives police forces access to far more images than government databases do.

Armed with such a powerful facial recognition tool, police departments will be able to identify and track individuals without their knowledge. Even the mere threat of surveillance may discourage people from speaking out or attending protests. These alarming possibilities are not hypothetical. In recent protests over the killing of George Floyd, the Minneapolis Police Department used Clearview AI to identify protesters; this is a direct threat to democracy and the right of individuals to peacefully protest.

These are not the only worries we should have about Clearview AI. The ties of Clearview founder Hoan Ton-That, as well as several employees, to the far right do little to assuage fears over how Clearview’s technology may be weaponised. Lawmakers have also raised concerns over the export of Clearview technology to authoritarian regimes with abysmal human rights records. As if concerns over Clearview’s infringement of privacy could not get any worse, reports that Clearview was hacked suggest that the data it holds is not sufficiently secure. This is especially worrisome given that law enforcement agencies are apparently uploading sensitive photos to the database. All in all, it’s a pretty long list of failures on the part of Clearview’s management.

Clearview AI could quite possibly spell the end of public anonymity as we know it. Its technology makes it easy to identify individuals and track their movements in an unprecedented way. With just one photo, law enforcement can access an incredible amount of information using Clearview AI’s facial recognition software. This particularly imperils vulnerable individuals: victims of trafficking, undocumented immigrants, and survivors of domestic violence or sexual assault. It allows mere suspicion, or even curiosity, on the part of law enforcement to be the basis of a comprehensive facial recognition search.

Clearview AI has stolen our photos and engineered the theft of our privacy.

#Clearview AI No Tech for U

And to all the police departments and law enforcement agencies that use your technology: @MinneapolisPD @SMCSheriff @PhillyPolice @IndStatePolice

No Tech for U.